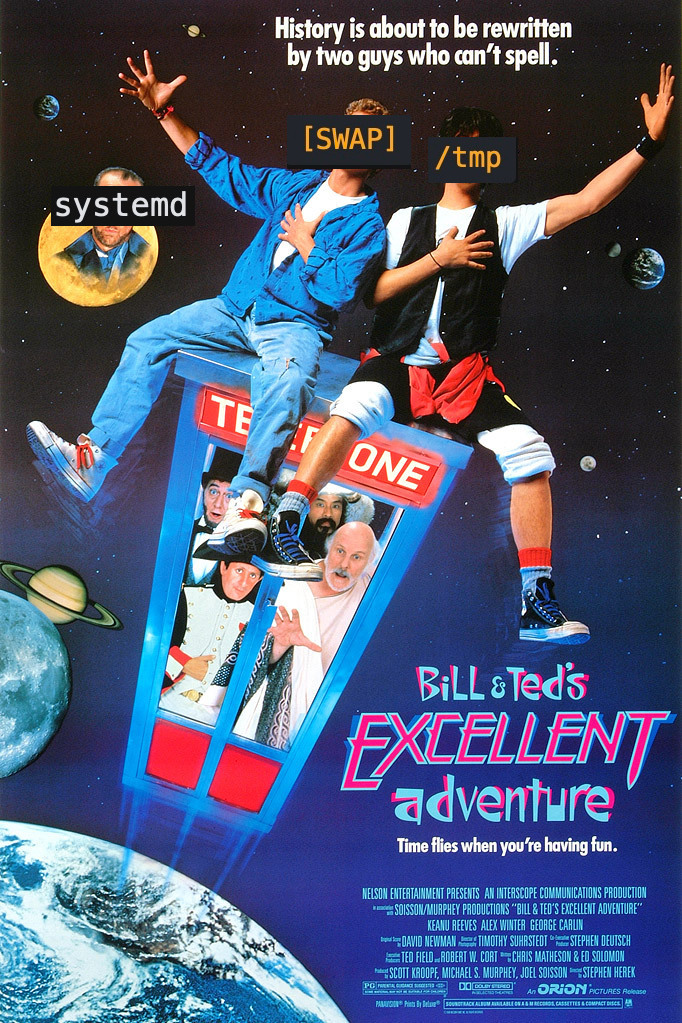

Swap and /tmp's Ephemeral Adventure

Recently, I was configuring a server environment on EC2 for running a web application. I had the following requirements, with respect to the use of the single instance store volume available (this was using a c5d instance type).

- Using a partition on the instance store volume, allow the system to have 8GB of swap, to give plenty of headroom in case we run out of memory.

- Using another partition on the instance store volume, mount the /tmp directory to the instance store, for ephemeral file storage. We’ll also make this 8GB.

- Allow this to work with rebooting/restarting the instance, and creating subsequent AMIs based off of it. Instance storage volumes are discarded when the instance is stopped or terminated, so we’ll need a way to ensure swap and /tmp are set up and mounted correctly in these cases.

AWS has a lot of helpful documentation, but nothing I found quite addressed my use case (like this article on setting up swap on a partition, or this article on mounting instance store volumes to directories).

I ended up going down quite the rabbit hole, with different configurations coming close, but then resulting in frustration. You’d think these ephemeral storage needs would be a common…

In this post, I’ll outline how I ended up fulfilling these ephemeral storage requirements on a c5d instance type with Amazon Linux 2, using simple systemd services. The services will run on boot and do the following, in order:

- Create two 8GB partitions on the instance store volume for swap and /tmp, if they don’t already exist.

- Mount swap

- Mount /tmp

Setting up partitions on the instance store volume

First, we’ll create a systemd service that will create the partitions for swap and /tmp.

Let’s add a simple “partitions” systemd service:

$ sudo vim /etc/systemd/system/partitions.service

And we’ll save the file with the following definition for our service:

[Unit]

Description=Partitions service

[Service]

ExecStart=/usr/sbin/partitions.sh

[Install]

WantedBy=multi-user.target

This will run a /usr/sbin/partitions.sh script on boot.

The c5d instance type is one among many in its generation that uses the Nitro System. This supposedly offers all sorts of great performance benefits, for which I’m genuinely grateful and very excited (🔥nItrO sYsTem🔥). But an annoying feature of Nitro System storage devices is that device names will change underneath you. For example, a device named /dev/nvme1n1 when you first initialize your instance may be /dev/nvme1n2 after rebooting 🙄.

To get around this naming issue we’ll need to install the nvme-cli package, which will help us get the device name.

sudo yum install nvme-cli
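To make the lookup concrete, here is a minimal sketch of the filter that the scripts below rely on, run against a captured sample instead of a live device. The sample text is a hypothetical, abbreviated rendering of nvme list output (the real output has a header and more columns), and find_instance_store_path is a name made up for this example. It uses grep -E rather than the -P form used in the scripts, and it allows multi-digit device numbers.

```shell
#!/bin/bash

# Hypothetical, abbreviated sample of `nvme list` output.
sample_nvme_list='/dev/nvme0n1  vol0123456789abcdef   Amazon Elastic Block Store
/dev/nvme1n1  AWS0123456789ABCDEF   Amazon EC2 NVMe Instance Storage'

# Read `nvme list`-style text on stdin and print the first
# instance store device path found in it.
find_instance_store_path() {
  grep 'Amazon EC2 NVMe Instance Storage' | grep -Eo -m 1 '/dev/nvme[0-9]+n[0-9]+'
}

# Prints /dev/nvme1n1 for the sample above.
printf '%s\n' "$sample_nvme_list" | find_instance_store_path
```

On a real instance the same function would be fed from the tool itself: sudo nvme list | find_instance_store_path.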

Now, let’s create the /usr/sbin/partitions.sh script that will be run by our partitions systemd service:

sudo vim /usr/sbin/partitions.sh

sudo chmod 700 /usr/sbin/partitions.sh

We’ll add the following to the script:

#!/bin/bash

# This will be the path for the instance store device on the instance.

# When first initializing the instance, this should be /dev/nvme1n1.

# After a reboot, this is likely to change and get incremented to something like /dev/nvme1n2.

instance_store_disk_path=$(sudo nvme list | grep 'Amazon EC2 NVMe Instance Storage' | grep -Po -m 1 '\/dev\/nvme\d{1}n\d{1}');

# We setup partition 2 for swap.

swap_partition_path="$instance_store_disk_path"p2;

# We setup partition 3 for /tmp.

tmp_partition_path="$instance_store_disk_path"p3;

# Do the partitions already exist?

# That will be the case when rebooting, but NOT when starting from

# a stopped instance or initializing from an AMI.

swap_partition_exists=$(lsblk -p | grep $swap_partition_path);

tmp_partition_exists=$(lsblk -p | grep $tmp_partition_path);

if [[ ! $swap_partition_exists ]]; then

# Create partition for swap with fdisk

# In order the options given here are:

# n : create a new partition

# p : primary type

# 2 : partition number

# (enter) : default "First sector" (the first free sector)

# +8GB : size of the partition

(echo n; echo p; echo 2; echo -ne "\n"; echo '+8GB'; echo w) | sudo fdisk $instance_store_disk_path;

fi

if [[ ! $tmp_partition_exists ]]; then

# Create partition for /tmp with fdisk

# In order the options given here are:

# n : create a new partition

# p : primary type

# 3 : partition number

# (enter) : default "First sector" (the first free sector)

# +8GB : size of the partition

(echo n; echo p; echo 3; echo -ne "\n"; echo '+8GB'; echo w) | sudo fdisk $instance_store_disk_path;

fi

# Inform the OS of the partition change

sudo partprobe;

The comments in the above script explain each step, but in order we:

- Retrieve the name of the instance store volume.

- Assign variables for the names of partitions 2 and 3.

- If the swap partition doesn’t exist, we create it with fdisk.

- If the /tmp partition doesn’t exist, we create it with fdisk.

- We inform the system of the new partitions with partprobe.
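One detail of the existence check is worth a closer look: piping lsblk into a plain grep matches substrings, so a search for a path ending in p2 would also match one ending in p20. That is unlikely to matter with two partitions, but a stricter variant is easy to sketch. The helper below is hypothetical (partition_listed is not part of the script above) and assumes it is fed one device path per line, which is the shape lsblk -pnro NAME prints.

```shell
#!/bin/bash

# Succeed only if the given device path appears as a whole line on stdin.
partition_listed() {
  local partition_path=$1
  grep -qxF -- "$partition_path"
}

# Hypothetical device names, in the shape printed by `lsblk -pnro NAME`.
sample_names='/dev/nvme1n1
/dev/nvme1n1p2'

if printf '%s\n' "$sample_names" | partition_listed /dev/nvme1n1p2; then
  echo "swap partition exists"
fi

if ! printf '%s\n' "$sample_names" | partition_listed /dev/nvme1n1p3; then
  echo "tmp partition missing"
fi
```

In the partitions script this would look something like lsblk -pnro NAME | partition_listed "$swap_partition_path" in place of the grep.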

I came across this non-interactive approach to using fdisk at this Ask Ubuntu answer. It seemed funny to me at first, but I found this to be a more user-friendly option for creating the partitions than parted or sfdisk.
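If the chain of echo commands feels hard to read, the same answers can be produced with a single printf. This is only a sketch: fdisk_answers is a hypothetical helper name, and it just prints the keystrokes, so it can be previewed safely without touching a disk.

```shell
#!/bin/bash

# Print the answers fdisk expects for a new primary partition, one per line:
# n, p, <partition number>, empty line (default first sector), <size>, w.
fdisk_answers() {
  local partition_number=$1
  local size=$2
  printf 'n\np\n%s\n\n%s\nw\n' "$partition_number" "$size"
}

# Preview the answers for partition 2 without running fdisk.
fdisk_answers 2 '+8GB'
```

To actually create the partition, the output would be piped into fdisk the same way as in the script: fdisk_answers 2 '+8GB' | sudo fdisk "$instance_store_disk_path".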

Now we’ll load the partitions service, enable it so it starts on boot, and start it now:

$ sudo chmod 664 /etc/systemd/system/partitions.service

$ sudo systemctl daemon-reload

$ sudo systemctl enable partitions.service

$ sudo systemctl start partitions.service

You should now be able to view the two new partitions with $ lsblk.

Mounting swap on partition 2

Now, we’ll create a swap systemd service that will run after the partitions service and mount swap to partition 2.

$ sudo vim /etc/systemd/system/swap.service

The service will look like the following. Note the After=partitions.service option:

[Unit]

Description=Swap service

After=partitions.service

[Service]

ExecStart=/usr/sbin/swap.sh

[Install]

WantedBy=multi-user.target
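One thing worth knowing about After=: with the default service type (simple), systemd treats a unit as started as soon as its process has been launched, so After=partitions.service orders when the two scripts start but doesn’t wait for partitions.sh to finish. Declaring the partitions unit as Type=oneshot makes systemd wait for the script to exit before starting units ordered after it. Here’s a sketch of that variant, which I haven’t run on the instance described in this post. It writes the unit to a scratch directory rather than /etc/systemd/system, and the unit_dir variable is just an assumption for the example.

```shell
#!/bin/bash

# Scratch location for the example; the real unit lives in /etc/systemd/system.
unit_dir=$(mktemp -d)

# Same unit as before, plus Type=oneshot so that units ordered
# After= this one wait for partitions.sh to exit.
cat > "$unit_dir/partitions.service" <<'EOF'
[Unit]
Description=Partitions service

[Service]
Type=oneshot
RemainAfterExit=yes
ExecStart=/usr/sbin/partitions.sh

[Install]
WantedBy=multi-user.target
EOF

cat "$unit_dir/partitions.service"
```

Even with this change, keeping a wait loop in the later scripts can still be a reasonable safeguard, since device nodes may show up a moment after partprobe returns.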

And now let’s similarly add a swap.sh script:

sudo vim /usr/sbin/swap.sh

sudo chmod 700 /usr/sbin/swap.sh

#!/bin/bash

# The instance store volume uses a NVMe-based path, which

# can change on reboots. We use the nvme-cli package tool to retrieve the name of the device.

instance_store_disk_name=$(sudo nvme list | grep 'Amazon EC2 NVMe Instance Storage' | grep -Po -m 1 'nvme\d{1}n\d{1}');

# Using partition 2 for swap

swap_partition_path=/dev/"$instance_store_disk_name"p2;

# The partprobe command in the partitions.sh script is by nature asynchronous.

# There's a chance we'll get here before the partition's device node exists.

# Wait until the partition shows up under /dev, then move on.

while [ ! -e $swap_partition_path ]; do sleep 1; done

# Disable swap

sudo swapoff -a

# Setup the linux swap area

sudo mkswap $swap_partition_path;

# Enable swap

sudo swapon $swap_partition_path;

In the script above, we’re doing the following:

- Retrieving the name of the instance store volume

- Assigning a variable to the swap partition name.

- Since partprobe is asynchronous, we have to wait to make sure the partition is available, so we sleep until that’s the case.

- Disable swap with swapoff, in case it’s already enabled.

- Set up the swap area on the partition with mkswap.

- Enable swap with swapon.
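The wait loop in the script will spin forever if the partition never appears, for example if the fdisk step failed. A bounded version is a small change. The sketch below is not what the script above does; wait_for_path and its default of 30 seconds are assumptions chosen for illustration.

```shell
#!/bin/bash

# Wait until a path exists, giving up after timeout_seconds.
# Returns 0 if the path appeared, 1 on timeout.
wait_for_path() {
  local path=$1
  local timeout_seconds=${2:-30}
  local waited=0
  while [ ! -e "$path" ]; do
    if [ "$waited" -ge "$timeout_seconds" ]; then
      return 1
    fi
    sleep 1
    waited=$((waited + 1))
  done
  return 0
}

# Example with a hypothetical device path and a 2 second limit.
if wait_for_path /dev/nvme1n1p2 2; then
  echo "partition is available"
else
  echo "timed out waiting for partition" >&2
fi
```

In swap.sh, the call would take "$swap_partition_path" as its first argument, and the script could exit with an error on timeout so the failure shows up in the service status.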

Now let’s load the new swap systemd service, enable it, and start it now:

$ sudo chmod 664 /etc/systemd/system/swap.service

$ sudo systemctl daemon-reload

$ sudo systemctl enable swap.service

$ sudo systemctl start swap.service

You should now be able to verify that swap is available with $ top and you’ll see the partition mounted for [SWAP] with $ lsblk.
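If you’d rather check this from a script than by eye, the kernel reports the total swap size in /proc/meminfo. Here’s a minimal sketch that pulls that number out. The swap_total_kb name is made up for this example, and the sample excerpt uses invented numbers.

```shell
#!/bin/bash

# Print the SwapTotal value (in kB) from /proc/meminfo-style text on stdin.
swap_total_kb() {
  awk '$1 == "SwapTotal:" { print $2 }'
}

# Hypothetical excerpt of /proc/meminfo; the numbers are made up.
sample_meminfo='MemTotal:        3883480 kB
SwapTotal:       7812496 kB
SwapFree:        7812496 kB'

# Prints 7812496 for the sample above.
printf '%s\n' "$sample_meminfo" | swap_total_kb
```

On the instance itself, swap_total_kb < /proc/meminfo should print a non-zero value once the swap service has run.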

Mounting /tmp on partition 3

Let’s add our third and final systemd service, to mount /tmp to partition 3.

$ sudo vim /etc/systemd/system/tmp.service

The service will look like the following. Note the After=swap.service option:

[Unit]

Description=tmp service

After=swap.service

[Service]

ExecStart=/usr/sbin/tmp.sh

[Install]

WantedBy=multi-user.target

And now let’s add a tmp.sh script:

sudo vim /usr/sbin/tmp.sh

sudo chmod 700 /usr/sbin/tmp.sh

#!/bin/bash

# The instance store volume uses a NVMe-based path, which

# can change on reboots. We use the nvme-cli package tool to retrieve the name of the device.

instance_store_disk_name=$(sudo nvme list | grep 'Amazon EC2 NVMe Instance Storage' | grep -Po -m 1 'nvme\d{1}n\d{1}');

instance_store_disk_path=/dev/"$instance_store_disk_name";

# Using partition 3 for /tmp

tmp_partition_path="$instance_store_disk_path"p3;

tmp_partition_name="$instance_store_disk_name"p3;

# If /tmp is already listed as a mounted block device by lsblk, then there's nothing to do.

# This will be the case on reboots.

tmp_partition_mounted=$(lsblk | grep tmp);

if [[ ! $tmp_partition_mounted ]]; then

# We are either booting from an AMI or a stopped instance,

# since /tmp is not mounted.

# The partprobe command in the partitions.sh script is by nature asynchronous.

# There's a chance we'll get here before the partition's device node exists.

# Wait until the partition shows up under /dev, then move on.

while [ ! -e $tmp_partition_path ]; do sleep 1; done

# Make file system

sudo mkfs.ext4 $tmp_partition_path

# Mount /tmp

sudo mount $tmp_partition_path /tmp

# Ensure permissions are what we want for /tmp

sudo chmod 1777 /tmp

fi

In the above we accomplish the following:

- Again, we use nvme-cli to retrieve the name of the instance store volume.

- We assign variables to the partition path and name, which we’ll need for the following commands.

- If the partition is already mounted, we don’t do anything. This will be the case on reboots, since rebooting leaves the instance storage volume attached.

- Similar to the swap service script, we have a sleep to ensure the partition is available.

- We make the file system on the partition path.

- We mount to /tmp.

- Set the desired permissions for /tmp.
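The mounted check in the script greps lsblk output for the string tmp, which would also match any other mount point that happens to contain it. A stricter test compares the mount point field exactly. The sketch below reads lines in the /proc/mounts layout (device, mount point, type, options); is_mounted_at is a hypothetical helper, not something the script above defines.

```shell
#!/bin/bash

# Succeed if some filesystem is mounted exactly at the given mount point,
# reading /proc/mounts-style lines on stdin.
is_mounted_at() {
  local mount_point=$1
  awk -v target="$mount_point" '$2 == target { found = 1 } END { exit !found }'
}

# Hypothetical excerpt of /proc/mounts.
sample_mounts='/dev/nvme0n1p1 / xfs rw,noatime 0 0
/dev/nvme1n1p3 /tmp ext4 rw,relatime 0 0'

if printf '%s\n' "$sample_mounts" | is_mounted_at /tmp; then
  echo "/tmp is already mounted"
fi
```

In tmp.sh this could stand in for the lsblk check as is_mounted_at /tmp < /proc/mounts.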

Now, let’s set the permissions for the new tmp service file, then load, enable, and start the service:

$ sudo chmod 664 /etc/systemd/system/tmp.service

$ sudo systemctl daemon-reload

$ sudo systemctl enable tmp.service

$ sudo systemctl start tmp.service

You should now be able to verify the partition is mounted at /tmp with $ lsblk.

The End of the Ephemeral Storage Journey

We’re now all set! You should now be able to enjoy an 8GB partition for swap and an 8GB partition for /tmp on the same instance store volume. You’ll be free to reboot, stop/start the instance, and initialize from AMIs without any hiccups.